I started with Unix during my college days, on a VT-100 terminal with a text-only command line. There was even a text-based online chat program (remember “talk”?). When a GUI arrived, in the form of the X Window System on Sun Microsystems Solaris machines, the experience was very different, and it was considered a productivity boost because we could multitask. However, old habits die hard, so even with a GUI I kept dedicated xterm windows for command-line work. I would run “screen” (aka “GNU Screen”) to have multiple, switchable windows within a single xterm.

The advantages of using screen are:

- When my SSH connection breaks, the command-line sessions keep running. This is useful when running shell scripts that take a long time to complete.

- Because the shell keeps its command-line history, I can review previous commands in case I forgot to document something.

- Instead of using the mouse to click on a different window, I use the keyboard shortcut Ctrl-A followed by a number key to switch between screen windows. Way quicker.
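Here is a minimal sketch of the detach-and-reattach workflow behind those points. The helper names (`s_new`, `s_list`, `s_back`) are made up for illustration; the `screen` options they wrap (`-S`, `-ls`, `-d -r`) are standard.

```shell
#!/usr/bin/env bash
# Hypothetical helper names; only the screen options are standard.

# Start a new named session (detach later with Ctrl-A d).
s_new() { screen -S "$1"; }

# List sessions, including detached ones left behind by a dropped SSH link.
s_list() { screen -ls; }

# Reattach to a session by name, detaching it from a stale terminal first.
s_back() { screen -d -r "$1"; }
```

A typical round trip would be `s_new deploy`, start the long-running script, lose the connection, log back in, and run `s_back deploy`. Inside the session, Ctrl-A c opens another window and Ctrl-A followed by a digit switches to it.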

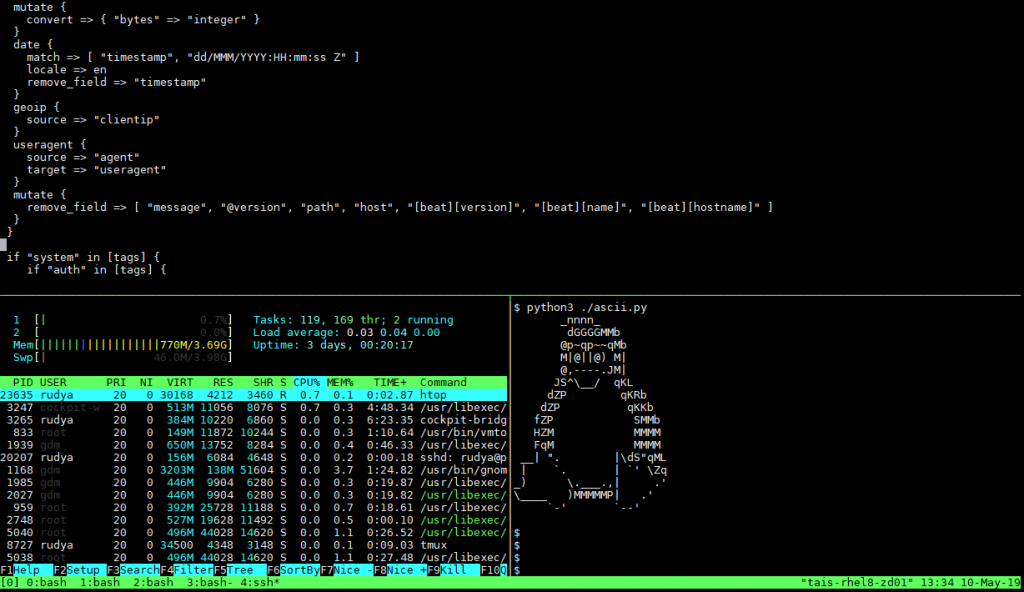

With Red Hat Enterprise Linux 8, I was introduced (read: forced) to a screen replacement called TMUX. It turns out it’s not a new utility, but it’s way more powerful, and more useful. After using it for a while, I saw these advantages:

- Behind a vendor-managed firewall, I have no control over connection keep-alives, so my SSH connections drop after a period of inactivity. TMUX’s status line includes a clock, which makes the connection send data once a minute and keeps it alive indefinitely. No more dropped connections and no more reconnecting.

- Being able to run screen within a TMUX window is pretty nifty. It gives me another layer of switchable windows, which is really handy when I have multiple servers representing the different layers of a site (e.g., web, JBoss, database). This is possible because TMUX’s key binding for switching windows is configurable and its default prefix (Ctrl-B) differs from screen’s (Ctrl-A).

- TMUX has window panes, for dashboard-like monitoring. Plus, it looks awesome!
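The pane dashboard can be scripted. This is a rough sketch, assuming `top`, `vmstat`, and `watch` are installed; the function name `dashboard`, the default session name `monitor`, and the choice of monitoring commands are all placeholders, not anything TMUX prescribes.

```shell
#!/usr/bin/env bash
# Build a three-pane monitoring session and attach to it.
# "dashboard" and the commands in each pane are illustrative choices.
dashboard() {
    local name="${1:-monitor}"

    # Create a detached session whose first pane runs top.
    tmux new-session -d -s "$name" 'top'

    # Split side by side; the new right-hand pane runs vmstat every 5 seconds.
    tmux split-window -h -t "$name" 'vmstat 5'

    # Split the active (right-hand) pane top/bottom; the new pane watches disk usage.
    tmux split-window -v -t "$name" 'watch df -h'

    # Bring the finished layout to the current terminal.
    tmux attach-session -t "$name"
}
```

Running `dashboard web` builds the layout and attaches to it. With the default bindings, Ctrl-B followed by an arrow key moves between panes and Ctrl-B d detaches, leaving the dashboard running.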

Most of the Red Hat Enterprise Linux systems I work with run version 6.x, where TMUX is not included in the RHN repository. Thus, I had to build it from source. These are the steps:

- Download, compile, and install the latest libevent and ncurses.

- Download TMUX and compile it with the following configure flags (note that I installed into a local directory under my home directory):

CFLAGS="-I$HOME/local/include -I$HOME/local/include/ncurses" LDFLAGS="-L$HOME/local/lib" CPPFLAGS="-I$HOME/local/include -I$HOME/local/include/ncurses" ./configure --prefix=$HOME/local
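The whole build can be sketched as one helper that is run once per source tree. This assumes the usual `./configure && make && make install` flow for all three packages and the `$HOME/local` prefix used above; the function name `build_into_prefix` and the source directory names in the comments are hypothetical and depend on where the tarballs were unpacked.

```shell
#!/usr/bin/env bash
# Sketch: build libevent, ncurses, and TMUX into a private prefix.
# PREFIX defaults to the $HOME/local location used above.
PREFIX="${PREFIX:-$HOME/local}"

# Configure, compile, and install one unpacked source tree into $PREFIX.
build_into_prefix() {
    local src="$1"
    (
        cd "$src" || exit 1
        # Point the preprocessor and linker at whatever is already in $PREFIX,
        # so TMUX finds the locally built libevent and ncurses.
        CPPFLAGS="-I$PREFIX/include -I$PREFIX/include/ncurses" \
        LDFLAGS="-L$PREFIX/lib" \
            ./configure --prefix="$PREFIX" &&
            make &&
            make install
    )
}

# Example order (directory names are hypothetical):
#   build_into_prefix ~/src/libevent
#   build_into_prefix ~/src/ncurses
#   build_into_prefix ~/src/tmux
```

The libraries go first so that the TMUX configure step can find them. If the resulting binary cannot locate the shared libevent library at run time, adding `$HOME/local/lib` to `LD_LIBRARY_PATH` is the usual workaround.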

If there’s any doubt that the command line is important to a sysadmin’s daily work, consider that Microsoft developers have proudly presented an expanded version of the Windows command prompt. The video below has the full highlights, and it looks great!

There’s even a trailer that rivals an iPhone launch commercial!

I’m excited that operating system vendors are now providing more robust terminal tools, making the command line a much better experience for all IT folks!