The IT Infrastructure Library (ITIL) is the de-facto standard around the world as a guide for IT professionals to manage IT services. It is designed to be customer focused, quality driven, and economical. It evolved from a standard defined in Great Britain by their Central Computing and Telecommunications Agency (CCTA) back in the 80’s. The British government needed a unified standard to improve the quality of IT services they had received. The result was this compilation of best practices, and it’s being used by other IT organizations around the world.

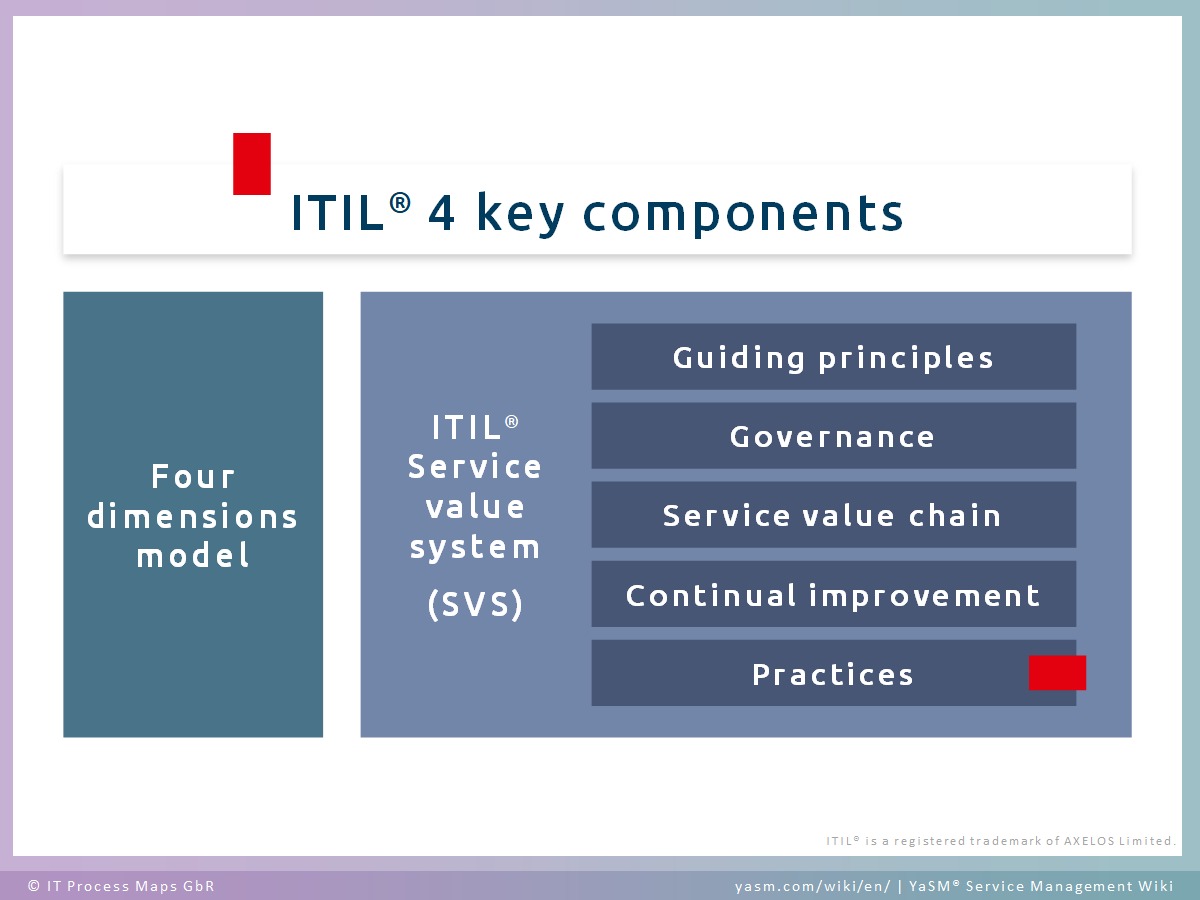

After several iterations, the ITIL standard is now on version 4, published in February 2019. The key components of ITIL 4 are the four dimensions model of service agreement, and the ITIL Service Value System (SVS), illustrated in the diagram below:

The four dimensions model are:

- Organizations and People

- Information and Technology

- Partners and Suppliers

- Value Streams and Processes

More detailed explanation of the model and components are in the YASM Wiki site.

For IT System Administrators and Managers, ITIL 4 framework has a focus on overseeing the infrastructure and platforms used by organization that enables monitoring of available technology solutions. It includes a model to manage vendors providing Software as a Service (SaaS) and cloud computing environments, as a way of flexible and on-demand expansion. It outlines requirements for configurable computing resources and network access.

The framework of ITIL 4 for IT infrastructure and platform management, stem from the following:

- IT Infrastructure components such as physical (ie. Dell, HP, Sparc servers), or virtual (ie. VMWare, Citrix, AWS), including the technologies used behind-the-scene, such as storage, network, Middleware applications (ie. JBOSS, Elasticsearch), and Operating Systems (ie. Linux, Windows).

- Develop an implementation and administration strategy for infrastructure or platform that is unique for the organization, to fulfill the business and technical requirements.

- Design communication methods between the organization’s own systems (cloud or on-premise), as well as to vendors in a secure and efficient way.

Of course, the above key concepts are an over simplification of the actual implementation. Running a data center is difficult and can be costly. Software is not perfect and fails without proper maintenance. Cybersecurity is complex, both in social and technological contexts. Technology keeps changing that will require constant training of the IT staff to keep up to date.

These are some of the reason why guidelines like ITIL 4 is valuable for IT Managers and Directors to be familiar with. It serves as a starting point to build IT infrastructure and platforms. They need to apply the practice on their own organization. With proper investment, going through many deployment iterations and lessons learned, an organization will be able to achieve the desired stability and security required.